Recently, Adobe dropped a doozy of a teaser for multiple new AI-enhanced tools that are coming to Premiere Pro “later this year.” The internet is already buzzing with speculation about when these generative AI tools will actually drop and what they might mean for video editors and the creative industry as a whole. Personally? I’m excited by the announcement and by the power of tools that should soon simplify many of my current workflows.

A (brief) history of Premiere Pro AI tools

It’s worth remembering that this plunge into generative AI is hardly Adobe’s first swing at providing AI enhancements to its users. Adobe has been researching and developing AI tools for years under its Adobe Sensei platform. Even if you have never heard of Sensei, you’ve likely seen its integrations across Creative Cloud applications. In Premiere Pro alone, AI tools have been powering my editing workflows for some time.

Remix

I’ll admit that I was late to the Remix game, but I now use this tool religiously. Fitting music to an edit can be a tedious task, and the Remix tool makes it simple to extend repeating stanzas in music cues. It even provides a quick way to extend notes to create ringouts.

Morph Cut

This special transition is perfect for getting rid of the “ums”, “uhhs” and long pauses during interviews. So long as the A and B frames of an edit are relatively similar, using Morph Cut will seem to stitch the cut together like it’s not even there.

Scene Edit Detection

This nifty function, which is easily accessed in a timeline, automatically breaks up long videos, like B-roll stringouts, into their individual clips. It provides the option of creating edits, adding individual subclips to a bin, and adding markers at scene changes.

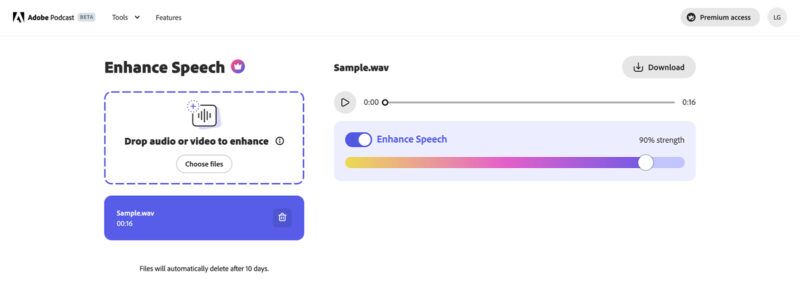

Enhance Speech

One of the newcomers to Premiere’s robust toolset, Enhance Speech takes the stunning tech from Adobe Podcast and bakes it into Premiere’s Essential Sound panel. Easily bring up the production value of dialog by denoising and EQ-ing with one simple click.

Speech to Text and Text-Based Editing

Text-Based Editing, built on Premiere Pro’s automatic transcription, was a phenomenal addition in 2023. Together they made editing long interviews much quicker and made script-related notes easier to track down across a timeline.

The new Premiere Pro AI tools

The relatively recent explosion of generative AI opened a world of new possibilities for creatives, especially editors. Ever since Photoshop was graced with generative fill and object removal superpowers last year, the post-production community has known it was only a matter of time before Adobe pushed these enhancements through to its video applications. Yes, with the right types of footage and a few tricks, it was possible to leverage Photoshop’s generative fill abilities inside of Premiere Pro (video below), but the process wasn’t as fluid as we all knew it could be.

Previously, you’d need to shoehorn Photoshop’s gen-AI tools into Premiere Pro.

Now the time is near and I’m pumped to try these new Premiere Pro AI tools. Below are the upcoming additions in the order that I’m most excited about.

Smart Masking

If you have used Photoshop at any time in the last four years, there’s a good chance you’ve encountered the Object Selection Tool. This tool appears to work magic as you move it across an image, temporarily highlighting individual elements that Photoshop thinks you might want to select. One click instantly selects the highlighted element.

Smart Masking in Premiere Pro looks to work similarly, though it might be more akin to After Effects’ Roto Brush 3.0. The final result inside of Premiere will likely land somewhere between the Photoshop and After Effects tools. The bottom line is that it should allow easy masking and tracking of objects without leaving Premiere Pro. That’s not just a time saver, but also something that brings compositing tools right into your timeline. Speaking of which…

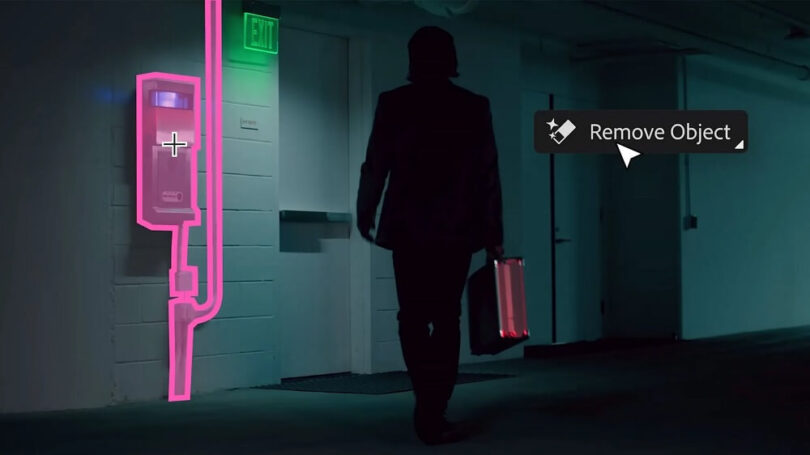

Object Removal

This is huge. It’s not uncommon for things to mistakenly be left in frame while shooting. Seriously, I can’t count the number of boom mics that I’ve comped out of interviews over my career. Though basic compositing tools and methods in Premiere Pro have been around for some time to remove unwanted objects, none are as advanced, easy, or fast as what was demoed in the teaser video.

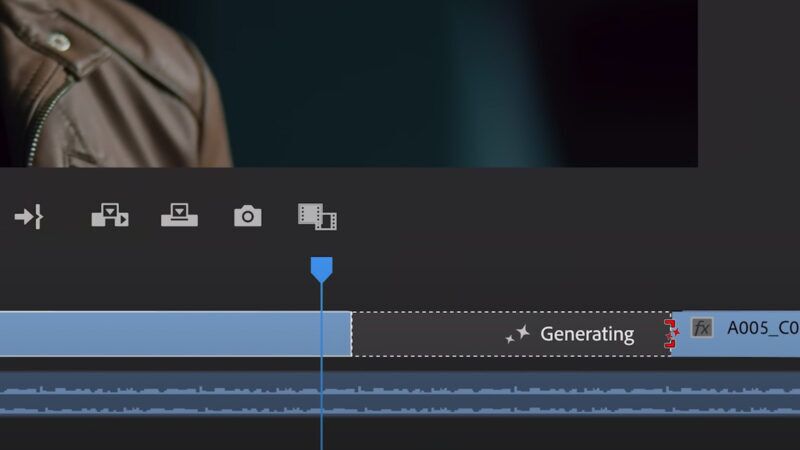

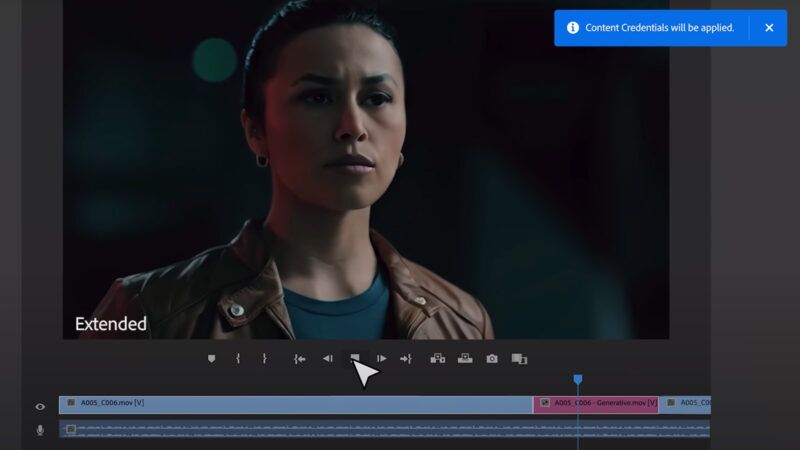

Generative Extend

I cut a lot of docu-style branded content that utilizes interviews to move the narrative. In many instances, a subject’s face may need to stay on screen for longer than the source material will allow. Maybe the subject blinks, keeps talking, or sneezes. Really, any number of things could happen.

The solution that I’ve used for years, with mixed success, is slowing the last portion of the interview down to about 60% and changing the clip’s time interpolation to Optical Flow. In most cases it’s not noticeable, but it does take a number of steps to accomplish and can sometimes lead to strange results.
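For anyone curious how much screen time that trick actually buys, here is a back-of-the-napkin sketch of the arithmetic. It is not anything Premiere Pro exposes; the function names are made up for illustration, and it assumes a constant speed change applied to the tail of a clip.

```python
def slowed_duration(source_seconds: float, speed_percent: float) -> float:
    """Return how long a segment lasts on screen when played at speed_percent.

    Hypothetical helper for illustration only: playing footage at 60% speed
    stretches it to 100/60 of its original length.
    """
    if source_seconds < 0:
        raise ValueError("source_seconds must not be negative")
    if speed_percent <= 0:
        raise ValueError("speed_percent must be positive")
    return source_seconds * 100.0 / speed_percent


def extra_screen_time(source_seconds: float, speed_percent: float) -> float:
    """Return the additional seconds gained by slowing the segment down."""
    return slowed_duration(source_seconds, speed_percent) - source_seconds


if __name__ == "__main__":
    tail = 3.0    # seconds of usable footage at the end of the clip (assumed)
    speed = 60.0  # the roughly 60% speed mentioned above
    print(f"On-screen length: {slowed_duration(tail, speed):.2f}s")
    print(f"Extra time gained: {extra_screen_time(tail, speed):.2f}s")
```

In other words, slowing the final 3 seconds of a shot to 60% stretches it to 5 seconds, which buys about 2 extra seconds on screen.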

The new Generative Extend AI tool could eliminate that workaround process entirely and work for a lot of shots, not only interviews. The end result would help editors continue to dictate an edit’s pace while using ideal shots that may have been unfortunately clipped short and otherwise made unusable.

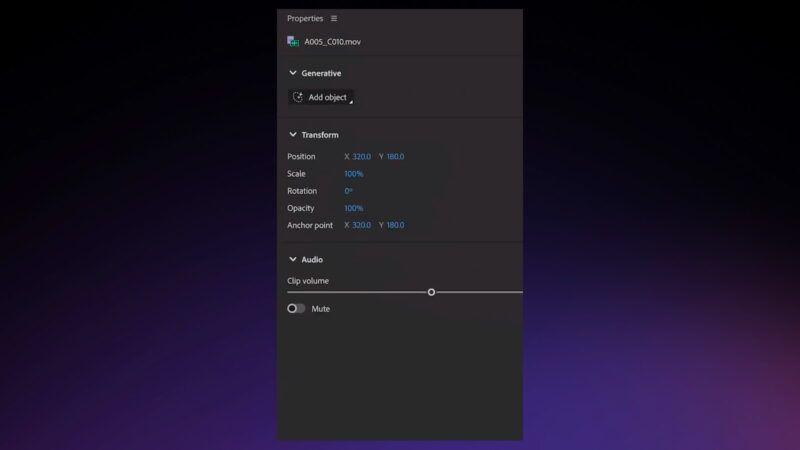

Object Addition

Adding objects found its way towards the bottom of my list only because I tend to want items in a shot removed more often than added. Still, the ability to use generative AI to swap an actor’s clothing or change a watch face, for example, has a lot of value, especially if Adobe cracks the code on how to direct specific design elements of the generated content.

What I’m most curious about, though, is whether object addition could work similarly to generative fill in Photoshop for extending the boundaries of a video frame. We’ve all been in situations where a source file, like an interview, is cropped too tight for our taste. Imagine how nice it would be to add a little extra breathing room to the top, bottom, or sides of your video even while the camera is moving. Maybe it could even replace the cropped borders of a Warp-Stabilized shot? Currently, that’s tedious at best, impossible at worst.

Generating Video

In a similar vein to object addition, the teased ability to generate new clips right on the timeline is enticing. It may not get used regularly in my workflow, but sometimes you just need that one extra clip. In that respect, it has the potential to save large swaths of time otherwise spent searching for the perfect stock footage shot. Like most, I see this eating away at the stock footage industry, but hopefully that encourages stock providers to double down on upping their production quality.

Third-party AI integrations

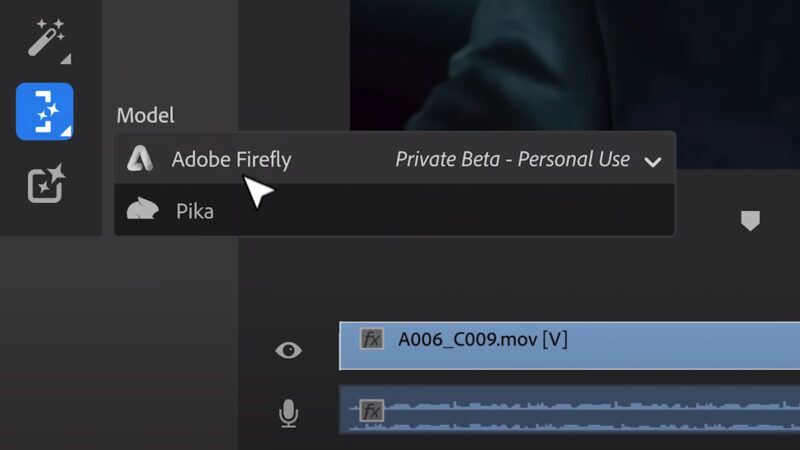

Everything is better with friends, right? Adobe seems to agree. Between OpenAI, Pika Labs, Runway, and Firefly, one of the biggest talking points around generative AI over the last year has been whose model is best. The truth of the matter is that they all seem to have strengths and weaknesses, not to mention that end users are already developing personal favorites.

Adobe sees a future in which thousands of specialized models emerge, each strong in its own niche. Adobe’s decades of experience with AI show that AI-generated content is most useful when it’s a natural part of what you do every day.

Adobe has clearly recognized this as well and has shown that, rather than having one model to rule them all, it hopes to let users pick between models at their discretion when using these new generative AI tools directly inside of Premiere. I’m certain that the hurdles for this potential collaborative function are huge, but I truly love seeing Adobe take the lead in solving the challenge.

Transparency through Content Credentials

In my opinion, we can’t discuss AI without addressing the elephant in the room. AI has opened a Pandora’s box regarding reality versus fiction and intellectual property. That said, I’ll leave those debates to the corners of the internet dedicated to such things. From my perspective, it’s good to see that Adobe again is leading the industry by putting forth an idea to help mitigate concerns over generated content.

By way of the Content Authenticity Initiative, the company has pledged to attach Content Credentials—an open-source nutrition label of sorts—to generative AI assets produced in its Creative Cloud applications. Users will be able to see how content was made, and even which specific AI models were used for generation. It’s a big step in the right direction for generative AI as it reaches across the creative industry.

Non-AI features, I see you

Post-production nerd that I am, I find the UI enhancements seen in the teaser just as exciting as the new AI features. The demo video is clearly a concept, but it points to the direction that Adobe intends to go, and in that direction there looks to be a new Properties panel.

Properties Panel

The Properties panel looks like a close cousin of the After Effects addition that has become beloved by many motion designers. How this would relate to the Effect Controls panel is anyone’s guess, but I can see a world where they would work in tandem for setting and manipulating keyframes. Another possibility is that Effect Controls would become a subsection of the Properties panel.

Conclusion

As someone who’s no stranger to AI filmmaking, I’m keenly aware that what we’re seeing now is only the tip of the rocket that’s going to blast the role of an editor to new stratospheres. Responsibilities will shift, and the stack of hats that editors routinely wear will continue to grow a little taller.

If you look at the history of editing, that’s honestly nothing new. Editors have constantly evolved alongside technology since first slicing and dicing on Moviolas. As the industry evolves, so shall we. These new AI tools will make the tedious tasks that once detracted from creative pursuits even easier to overcome. And I’m here for it.